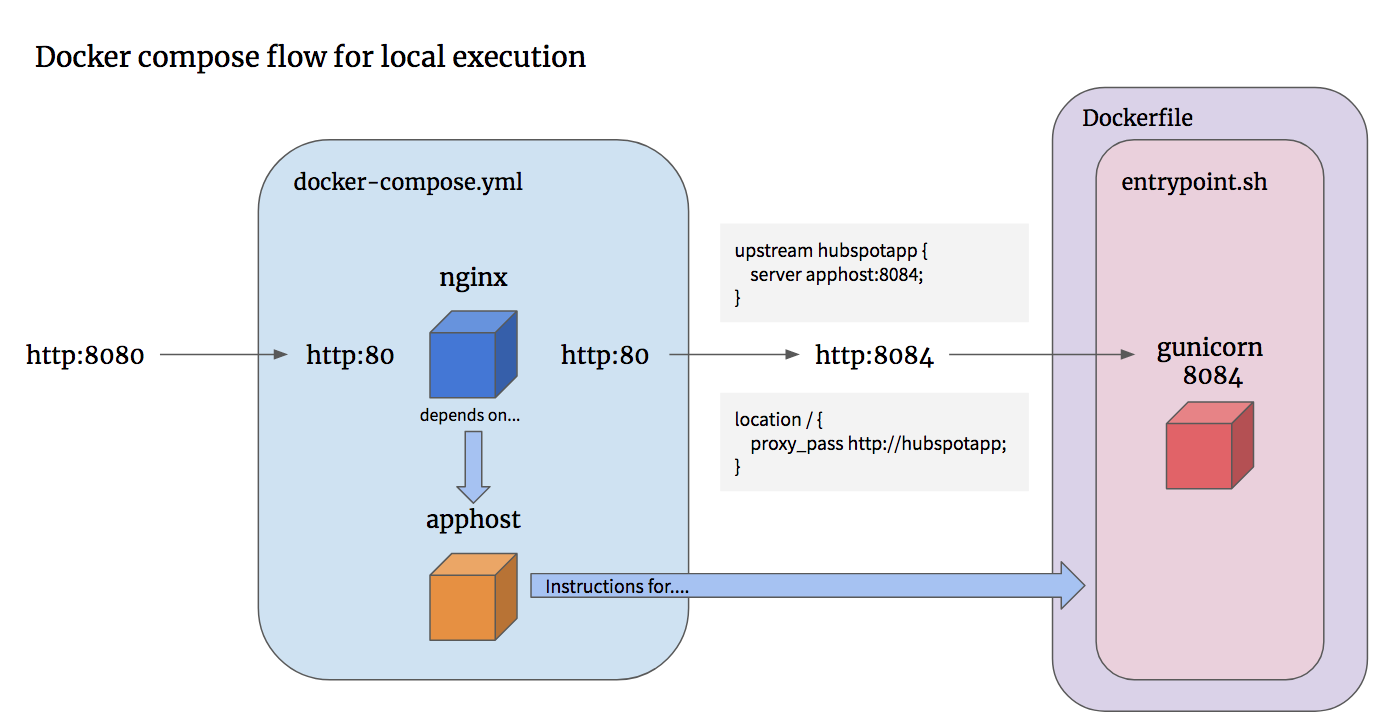

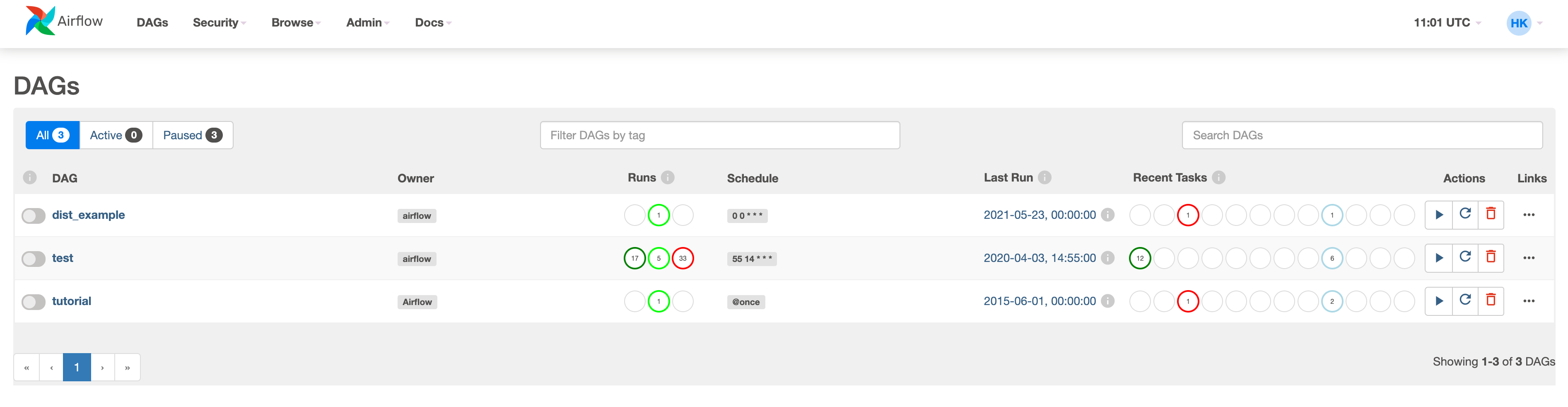

How To Build An ETL Using Python, Docker, PostgreSQL And Airflow

During the past few years, I have developed an interest in Machine Learning but have never written much about the topic. In this post, I want to share some insights about the foundational layers of the ML stack. I will start with the basics of the ML stack and then move on to the more advanced topics. This post will detail how to build an ETL (Extract, Transform and Load) pipeline using Python, Docker, PostgreSQL and Airflow.

With smart devices, online communities, and E-Commerce, there is an abundance of raw, unfiltered data in today's industry. However, most of it is squandered because it is tangled and therefore difficult to interpret. ETL pipelines combat this by automating data collection and transformation so that analysts can use the data for business insights. There are many different tools and frameworks for building ETL pipelines. In this post, I will focus on how one can build an ETL pipeline by hand using Python, Docker, PostgreSQL and Airflow.

The How

For this post, we will be using data from the UC Irvine Machine Learning Repository. The dataset contains Wine Quality information and is the result of a chemical analysis of various wines grown in Portugal. We will need to extract the data from a public repository (for this post I went ahead and uploaded the data to …) and transform it into a format that can be used by ML algorithms (not part of this post). Thereafter, we will load both the raw and the transformed data into a PostgreSQL database running in a Docker container. Finally, we will create a DAG that Airflow uses to run the ETL pipeline periodically.

The Walk-through

Before we can do any transformation, we need to extract the data from a public repository. Using Python and Pandas, we will extract the data and upload the raw data to a PostgreSQL database.
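The extract-and-load step can be sketched with pandas. This is a minimal sketch, not the author's code. The function names `extract` and `load` and the table name `raw_wine` are hypothetical. The sketch also assumes that the source CSV is semicolon-separated, as the UCI Wine Quality files are. `DataFrame.to_sql` accepts either a SQLAlchemy engine (for PostgreSQL) or a plain `sqlite3` connection. The demo uses the latter so it runs without a database server.

```python
import io
import sqlite3
from typing import IO, Union

import pandas as pd


def extract(source: Union[str, IO[str]]) -> pd.DataFrame:
    """Read the raw Wine Quality CSV from a URL, a file path or a file-like object.

    Assumption: the file is semicolon-separated, like the UCI Wine Quality CSVs.
    """
    return pd.read_csv(source, sep=";")


def load(df: pd.DataFrame, con, table: str) -> int:
    """Write `df` to `table` (replacing any existing table) and return the row count.

    `con` can be a SQLAlchemy engine (e.g. for PostgreSQL) or a sqlite3 connection.
    """
    df.to_sql(table, con, if_exists="replace", index=False)
    return len(df)


if __name__ == "__main__":
    # Illustrative two-row sample in the same format, only so the demo runs offline.
    sample = io.StringIO('"fixed acidity";"quality"\n7.4;5\n7.8;5\n')
    raw = extract(sample)
    # In-memory SQLite stands in for the PostgreSQL database here.
    with sqlite3.connect(":memory:") as con:
        rows = load(raw, con, "raw_wine")
        print(rows, con.execute("SELECT COUNT(*) FROM raw_wine").fetchone()[0])
```

To target the PostgreSQL container instead, pass an engine such as `sqlalchemy.create_engine("postgresql+psycopg2://user:password@localhost:5432/wine")` as `con`. The credentials, port mapping and database name are placeholders, and this requires SQLAlchemy and psycopg2 to be installed. Using `if_exists="replace"` keeps reruns of the pipeline idempotent for the raw table. Appending would be the alternative if each run should add a new batch.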
